Introduction

Organizations investing in AI face a critical challenge: despite their remarkable capabilities, Large Language Models (LLMs) remain disconnected from the most valuable enterprise data sources - databases, documentation repositories, communication platforms, and specialized business tools.

The Model Context Protocol (MCP), an open standard developed by Anthropic, addresses this challenge by providing a universal framework for connecting LLMs to enterprise systems. By standardizing these connections, MCP dramatically reduces integration costs and complexity while unlocking the full potential of AI assistants across the organization.

Who is Anthropic?

Anthropic is an AI safety and research company that develops Claude, a family of LLMs that competes with OpenAI’s ChatGPT and Google’s Gemini, among others. It is one of the major frontier AI labs, alongside OpenAI, Google DeepMind, DeepSeek, and others.

Anthropic is building MCP as an open-source project and ecosystem.

The Problem MCP Solves

Today’s LLM tools face several critical challenges:

Data Isolation: LLM tools don’t natively have access to databases, documentation, emails, and other essential systems where your organization’s knowledge lives.

Custom Integration Overhead: Each organization using LLM clients has to build custom code to connect to their external systems. This creates redundant work across the industry.

Lack of Standardization: Even within a single organization, each integration requires custom APIs and connectors, creating a complex web of unique connections that are difficult to maintain and scale.

The result is that organizations spend valuable engineering resources building and maintaining these connections rather than focusing on their core business problems.

What is MCP?

The Model Context Protocol (MCP) is an open standard that enables integration between LLM applications and external data sources and tools. Think of MCP as a “USB-C port” for AI applications: just as USB-C is a universal standard for connecting devices, MCP standardizes how applications provide context to LLMs.

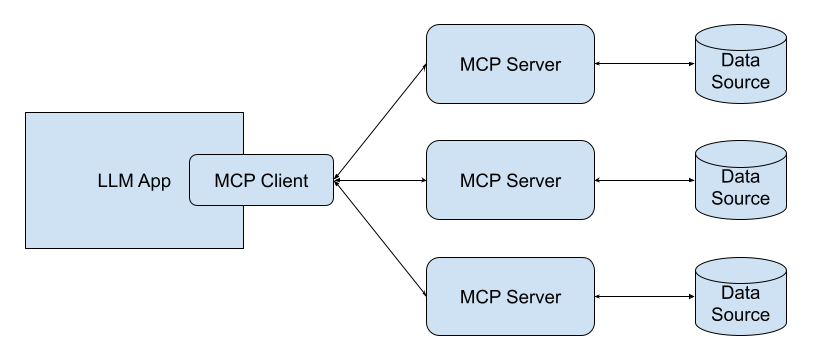

At its core, MCP implements a client-server architecture where host applications (clients) can connect to multiple servers. This standard protocol allows AI systems to interact with your data and tools in a consistent way without requiring custom code for each new connection.
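To make the client-server exchange more concrete, here is a minimal sketch in Python. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are method names from the MCP specification. Everything else is a hypothetical illustration rather than the official SDK: the `ToyServer` class, the `lookup_customer` tool, its sample data, and the in-process function call that stands in for a real transport.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the message envelope MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


class ToyServer:
    """Hypothetical in-process stand-in for an MCP server (no real transport)."""

    def __init__(self):
        # Invented sample data for this sketch only.
        self._customers = {"42": "Ada Lovelace"}

    def handle(self, raw):
        req = json.loads(raw)
        params = req.get("params", {})
        if req["method"] == "tools/list":
            # Advertise the tools this server exposes, with a JSON Schema for inputs.
            result = {"tools": [{
                "name": "lookup_customer",
                "description": "Look up a customer name by id",
                "inputSchema": {
                    "type": "object",
                    "properties": {"customer_id": {"type": "string"}},
                    "required": ["customer_id"],
                },
            }]}
        elif req["method"] == "tools/call" and params.get("name") == "lookup_customer":
            cid = params["arguments"]["customer_id"]
            name = self._customers.get(cid, "unknown")
            result = {"content": [{"type": "text", "text": name}]}
        else:
            # Simplification: unknown methods and unknown tools share one error.
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def call(server, method, params=None):
    """Client side: serialize the request, send it, parse the response."""
    return json.loads(server.handle(json.dumps(make_request(method, params))))


server = ToyServer()
tools = call(server, "tools/list")["result"]["tools"]
reply = call(server, "tools/call",
             {"name": "lookup_customer", "arguments": {"customer_id": "42"}})
print(tools[0]["name"])                       # lookup_customer
print(reply["result"]["content"][0]["text"])  # Ada Lovelace
```

The client never needs to know how the server stores or fetches customers. It discovers the tool, reads its input schema, and calls it by name, which is what lets the same client code work against any server.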

As Figure 1 illustrates, MCP serves as an intermediary layer between LLM clients (like Claude or ChatGPT) and various data sources (databases, document repositories, business applications). Rather than building custom connections between each LLM and each data source, organizations implement MCP servers that expose their data systems through a consistent interface. This standardized approach means that any LLM client that speaks MCP can access any data source that has an MCP server implementation, which substantially reduces integration complexity.
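The reduction in integration work can be sketched with simple arithmetic. The snippet below assumes one custom connector per client and data source pair in the point-to-point case, and one MCP implementation per client plus one per data source otherwise. The function names and the example counts are illustrative, not measurements.

```python
def point_to_point(clients, sources):
    """Custom connectors needed when every client integrates with every source."""
    return clients * sources


def with_mcp(clients, sources):
    """Implementations needed when each client and each source speaks MCP once."""
    return clients + sources


# Illustrative sizes only: (number of LLM clients, number of data sources).
for clients, sources in [(2, 5), (3, 8), (4, 10)]:
    print(f"{clients} clients x {sources} sources: "
          f"{point_to_point(clients, sources)} custom connectors vs "
          f"{with_mcp(clients, sources)} MCP implementations")
```

The gap widens as either side grows, because the point-to-point count is a product while the MCP count is a sum.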

Current Status

MCP was released in November 2024, so it is still in the early stages of adoption. However, OpenAI has recently announced that it will support MCP across its products, which should help accelerate adoption and maturity. Because MCP is an open standard, more eyes on the project should help it improve.

Key Benefits of MCP

The Model Context Protocol delivers several significant advantages for organizations looking to integrate LLMs with their data systems:

Build Once, Use Everywhere: Tools built with MCP can be used by any LLM client that supports the protocol, eliminating redundant development. For example, a Microsoft SQL Server connector built using MCP could be used by any company right away, with no need to build its own integration.

Unified Protocol: Integrating an LLM client with one MCP server means potential access to multiple tools and services. Once an LLM client supports the MCP standard, it can connect to any MCP-compatible server.

Dynamic Extensibility: MCP servers can be dynamically added to LLM clients without code changes. This means organizations can extend their AI capabilities by simply configuring new MCP servers as they become available, often requiring just a client restart. This flexibility allows businesses to rapidly adapt their AI systems as new data sources become relevant.
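As a rough sketch of what "configuring a new MCP server" can look like: Claude Desktop, for example, reads a JSON file with a top-level `mcpServers` map from server name to launch command. The helper below builds such a structure in Python. The `add_server` function, the server names, the script paths, and the environment variable are all placeholders invented for this example, and other clients may use a different configuration format.

```python
import json


def add_server(config, name, command, args=(), env=None):
    """Return a new config with one more server entry; the input is not mutated."""
    servers = dict(config.get("mcpServers", {}))
    entry = {"command": command, "args": list(args)}
    if env:
        entry["env"] = dict(env)
    servers[name] = entry
    return {**config, "mcpServers": servers}


config = {"mcpServers": {}}

# Hypothetical servers: each entry tells the client how to start one server process.
config = add_server(config, "docs", "python", ["docs_server.py"])
config = add_server(config, "warehouse", "python", ["warehouse_server.py"],
                    env={"WAREHOUSE_DSN": "<set locally>"})

print(json.dumps(config, indent=2))
```

Adding a capability here is a data change rather than a code change, which is why a client restart is often all that is needed to pick it up.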

Existing MCP Solutions

Servers

Pre-built MCP servers already exist for popular enterprise systems like Google Drive, Slack, GitHub, Git, Postgres, Puppeteer, and many more. Organizations can quickly connect their LLMs to these systems without building custom integrations.

Clients

Many current MCP clients are developer tools, but the Claude Desktop app also supports MCP, and following OpenAI’s recent announcement, its tools are expected to support MCP as well.

Security Considerations

While MCP provides a standardized way to connect LLMs to various data sources, security controls for these connections are still evolving. Currently, MCP implements a basic security model where servers maintain control over their own resources, creating clear system boundaries that don’t require sharing API keys with LLM providers.

However, businesses should be aware that MCP’s security framework is still in early stages of development. Companies can implement their own security controls in MCP servers, but comprehensive enterprise-grade authentication and authorization mechanisms are still developing.
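The idea that a server keeps control of its own resources can be sketched as follows. This is a hypothetical illustration, not part of the MCP specification or SDK: the `InventoryServer` class, the `INVENTORY_API_KEY` variable, and the table allowlist are invented names. The point is that the credential lives only in the server process and that the server decides what a caller may read.

```python
import os

# Hypothetical allowlist: the server, not the LLM client, decides what is exposed.
ALLOWED_TABLES = {"orders", "products"}


class InventoryServer:
    """Hypothetical server-side wrapper that owns its backend credential."""

    def __init__(self, api_key):
        self._api_key = api_key  # stays inside the server process

    def read_table(self, table):
        if table not in ALLOWED_TABLES:
            raise PermissionError(f"table {table!r} is not exposed by this server")
        # A real server would use self._api_key to query the backend here.
        # Only the result is returned; the key is never part of the response.
        return {"table": table, "rows": []}


# The key comes from the server's own environment ("demo-key" is a fallback for the sketch).
server = InventoryServer(os.environ.get("INVENTORY_API_KEY", "demo-key"))

print(server.read_table("orders"))
try:
    server.read_table("payroll")
except PermissionError as exc:
    print("denied:", exc)
```

Controls like this allowlist are the kind of check a company would need to add itself today, since the protocol does not yet prescribe a full authorization model.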

For now, MCP is primarily focused on local connections, with work in progress for remote servers that would require more robust security measures. As MCP adoption grows, businesses will need to actively participate in shaping security standards that meet their specific compliance requirements and risk tolerance levels.

Conclusion

The Model Context Protocol represents a significant step forward in addressing one of the biggest challenges in the LLM space: connecting powerful AI models to the systems where your data lives. By providing a standardized framework for these connections, MCP eliminates redundant work, reduces complexity, and enables organizations to focus on using AI to solve their unique problems.

As an open standard, MCP encourages collaborative development and wider adoption, creating a more integrated and capable AI ecosystem for everyone.

Additional Resources

- MCP Website
  - The official MCP website, with more information about the protocol and how to get started.

- Applied Model Context Protocol (MCP) In 20 Minutes
  - A helpful video that walks through creating an MCP client to connect to an MCP server. It also shows how LLMs generally use tools, which provides useful contrast for how MCP helps.

- MCP Security and Trust & Safety Spec
  - The MCP security spec as of March 26, 2025.

- Example MCP Security Discussion
  - A discussion about OAuth and its role in MCP servers.